Future Trends of Artificial Intelligence Using Big Data

Artificial Intelligence (AI) is the simulation of human intelligence in machines, enabling them to perform tasks that typically require human cognitive abilities, such as learning, reasoning, problem-solving, perception, and decision-making. AI systems utilize algorithms and computational models to process data, identify patterns, and make predictions or decisions autonomously. These systems range from basic rule-based programs to advanced machine learning and deep learning models that continuously improve their performance by learning from data.

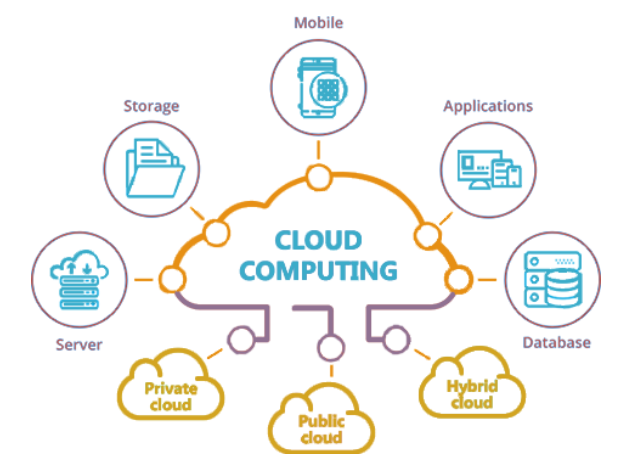

AI using Big Data involves the integration of sophisticated AI algorithms with massive and complex datasets, allowing systems to learn, adapt, and make intelligent decisions. By analyzing vast amounts of structured and unstructured data such as text, images, sensor inputs, and transactional records, AI models can identify patterns, predict outcomes, and automate tasks with remarkable accuracy. For instance, machine learning and deep learning techniques process Big Data to enable personalized recommendations in e-commerce, real-time fraud detection in finance, predictive maintenance in manufacturing, and disease diagnosis in healthcare. Big Data serves as the foundation for training robust AI systems, while AI transforms raw data into actionable insights. This synergy drives innovation across industries, though it also necessitates robust data infrastructure, ethical governance, and advanced tools like cloud computing and neural networks to address challenges related to scalability, privacy, and computational demands. Ultimately, AI powered by Big Data is reshaping how organizations operate, innovate, and deliver value in a data-driven world.

Advanced AI Models and Algorithms

Sophistication through Scale. AI models such as transformers and generative adversarial networks (GANs) achieve greater accuracy and broader capabilities when trained on larger datasets. This trend enables breakthroughs in natural language processing (NLP), computer vision, and generative AI. Sophistication through scale refers to the significant improvement in AI system performance as models are trained on increasingly large datasets and scaled up in computational resources and model complexity. This is made possible by the availability of massive datasets (Big Data) and advances in hardware (e.g., GPUs, TPUs) and algorithms (e.g., deep learning, transformers). For example, large language models like GPT-4 or Gemini demonstrate strong accuracy and versatility by training on extensive text corpora, enabling tasks such as language translation, content generation, and natural language understanding. Similarly, computer vision models trained on millions of images approach or match human-level performance on benchmark object recognition and image classification tasks.

Real-Time Processing. AI systems are increasingly integrated with streaming data platforms like Apache Kafka to enable instant decision-making in applications such as fraud detection, autonomous systems, and the Internet of Things (IoT). Real-time processing in AI refers to the ability of systems to analyze and act on data as it is generated, ensuring immediate responses. This capability is crucial in fields like autonomous driving, healthcare monitoring, and industrial automation, where delays are unacceptable. Real-time AI relies on high-speed data processing frameworks (e.g., Apache Kafka, Apache Flink) and edge computing to minimize latency, often delivering insights or actions within milliseconds. For instance, autonomous vehicles process sensor data in real time to navigate and avoid obstacles, while financial systems detect fraudulent transactions as they occur. Real-time processing combines streaming data with advanced machine learning models, enabling dynamic adaptation to changing conditions. However, it requires robust infrastructure, efficient algorithms, and low-latency networks to handle the volume, velocity, and variety of data.
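
To make the idea of acting on data as it arrives more concrete, here is a minimal sketch of a streaming anomaly detector. It is only an illustration: it reads from a plain Python list rather than a real Kafka or Flink stream, and the function name `detect_anomalies`, the window size, the threshold, and the sample amounts are all assumptions chosen for the example.

```python
from collections import deque
from math import sqrt


def detect_anomalies(stream, window=5, threshold=3.0):
    """Yield (index, value) for readings far from the recent rolling mean.

    `window` and `threshold` are illustrative defaults, not tuned values.
    """
    recent = deque(maxlen=window)  # only the last `window` readings are kept
    for i, value in enumerate(stream):
        if len(recent) == window:
            mean = sum(recent) / window
            variance = sum((x - mean) ** 2 for x in recent) / window
            std = sqrt(variance)
            # Flag the reading if it deviates strongly from recent history.
            if std > 0 and abs(value - mean) > threshold * std:
                yield i, value
        recent.append(value)


# Hypothetical transaction amounts arriving one at a time.
amounts = [20.0, 22.0, 19.0, 21.0, 20.0, 23.0, 500.0, 21.0]
flagged = list(detect_anomalies(amounts))
print(flagged)
```

Because the detector keeps only a fixed-size window, its memory use stays constant no matter how long the stream runs, which is the property that makes this style of processing suitable for low-latency settings. A production system would also need to decide whether flagged values should be excluded from the window so they do not distort later statistics.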

Edge AI and Decentralized Learning

Edge AI and decentralized learning are transforming data processing and analysis by bringing computation closer to the source of data generation, rather than relying solely on centralized cloud systems. Edge AI involves deploying AI models directly on devices like smartphones, IoT sensors, and autonomous vehicles, enabling real-time decision-making with minimal latency. This is particularly critical for applications such as smart cities, industrial automation, and healthcare monitoring, where immediate responses are essential. Decentralized learning, including techniques like federated learning, allows AI models to be trained across multiple devices or servers without transferring raw data to a central location; only model updates are exchanged. This approach enhances data privacy, reduces bandwidth usage, and enables collaboration across organizations while making it easier to comply with regulations like GDPR. For example, hospitals can collaboratively train AI models on patient data without sharing the underlying patient records. Together, Edge AI and decentralized learning address key challenges in Big Data, such as scalability, latency, and privacy, enabling more efficient and secure AI deployments.
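
The federated learning idea described above can be sketched in a few lines. The example below is a simplified illustration of federated averaging on a one-parameter linear model, not a production implementation: the helper names `local_update` and `federated_average`, the learning rate, the number of rounds, and the three client datasets are all hypothetical. Each client trains on its own data and sends back only its updated weight, which the server averages in proportion to the client's sample count.

```python
def local_update(w, data, lr=0.01, epochs=5):
    """Run a few gradient-descent steps on one client's private data.

    The model is y = w * x with squared-error loss; only `w` leaves the client.
    """
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(client_weights, client_sizes):
    """Combine client models, weighting each by its number of samples."""
    total = sum(client_sizes)
    return sum(w * n for w, n in zip(client_weights, client_sizes)) / total


# Hypothetical private datasets held by three clients; all follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]

global_w = 0.0
for _ in range(50):  # communication rounds between server and clients
    updates = [local_update(global_w, data) for data in clients]
    global_w = federated_average(updates, [len(d) for d in clients])

print(round(global_w, 4))
```

The raw `(x, y)` pairs never appear in the server loop, which is the point of the technique. Real deployments typically add safeguards such as secure aggregation or differential privacy, since model updates alone can still leak information.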

Industry-Specific Transformations

AI using Big Data is revolutionizing industries by enabling intelligent decision-making and automation through the analysis of vast datasets. In healthcare, AI analyzes electronic health records, genomic data, and medical imaging to enable personalized treatments and early disease detection. In finance, Big Data powers fraud detection, risk assessment, and algorithmic trading by processing real-time transaction data and market trends. Retail benefits from AI-driven demand forecasting, inventory optimization, and hyper-personalized customer experiences using purchase history and behavioral data. In manufacturing, predictive maintenance and supply chain optimization are achieved through sensor data and IoT integration, reducing downtime and costs. Transportation sees advancements in route optimization, autonomous vehicles, and traffic management by analyzing GPS, weather, and traffic data. Meanwhile, the energy sector uses AI to optimize grid management and renewable energy forecasting by processing data from smart meters and environmental sensors.
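
As one small illustration of the retail demand forecasting mentioned above, the sketch below applies simple exponential smoothing to a short sales history. This is a deliberately basic statistical baseline rather than the kind of large-scale model a retailer would deploy; the function name `exponential_smoothing_forecast`, the smoothing factor, and the sales figures are assumptions made for the example.

```python
def exponential_smoothing_forecast(history, alpha=0.5):
    """Return a one-step-ahead forecast using simple exponential smoothing.

    `alpha` in (0, 1] controls how strongly recent observations are weighted.
    """
    if not history:
        raise ValueError("history must contain at least one observation")
    level = history[0]  # start from the first observed value
    for observation in history[1:]:
        # Blend the newest observation with the running estimate.
        level = alpha * observation + (1 - alpha) * level
    return level


# Hypothetical weekly unit sales for a single product.
weekly_sales = [100, 120, 110, 130]
next_week = exponential_smoothing_forecast(weekly_sales)
print(next_week)
```

A higher `alpha` makes the forecast react faster to recent changes in demand, while a lower value smooths out noise.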

Data Management Innovations

Data management innovations in AI using Big Data are transforming how organizations collect, store, process, and utilize vast amounts of data to power artificial intelligence systems. These innovations address challenges related to scalability, complexity, and efficiency in handling Big Data, enabling AI models to deliver more accurate and actionable insights.

Quantum AI and High-Performance Computing

Quantum AI and high-performance computing (HPC) represent the next frontier in computational power, enabling complex problems to be processed at far greater speeds. Quantum AI leverages principles of quantum mechanics, such as superposition and entanglement, to perform certain computations that are impractical for classical computers. This has the potential to advance AI by speeding up optimization, molecular simulation, and parts of machine learning, although the size of the speedup depends on the problem and is not always exponential. For example, Shor's algorithm factors integers in polynomial time, far faster than the best known classical methods, which has major implications for cryptography, while Grover's algorithm offers a quadratic speedup for unstructured search, which could benefit large-scale data analysis. Quantum simulation of molecules could similarly accelerate drug discovery. Meanwhile, HPC complements AI by providing the massive parallel processing capabilities needed to train large-scale models and analyze vast datasets. HPC systems, equipped with GPUs and TPUs, are already powering breakthroughs in deep learning, natural language processing, and climate modeling.
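
To give a sense of scale for Grover's algorithm, the arithmetic below compares the expected number of lookups a classical search needs to find one marked item among n with the approximate number of Grover iterations, about (π/4)·√n. This is only a back-of-the-envelope calculation, not a quantum simulation, and the function names are made up for the example. It shows that the speedup for unstructured search is quadratic.

```python
from math import floor, pi, sqrt


def classical_expected_queries(n):
    """Expected lookups to find one marked item among n, checking in random order."""
    return (n + 1) / 2


def grover_iterations(n):
    """Approximate optimal number of Grover iterations for one marked item."""
    return floor(pi / 4 * sqrt(n))


n = 1_000_000
print(classical_expected_queries(n))  # 500000.5
print(grover_iterations(n))           # 785
```

For a million items the classical search needs about half a million lookups on average, while Grover's algorithm needs on the order of a thousand iterations. Each quantum iteration is far more expensive on current hardware, so the practical advantage depends on much more than these counts.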

Multimodal and Autonomous Systems

Multimodal and autonomous systems represent a cutting-edge frontier where AI systems integrate and process multiple types of data, such as text, images, audio, and sensor inputs, to achieve a more comprehensive understanding of complex environments. These systems leverage Big Data to train models that can simultaneously interpret and correlate diverse data streams, enabling richer insights and more human-like decision-making. For example, autonomous vehicles combine data from cameras, LiDAR, and radar with real-time traffic information to navigate safely, while healthcare systems analyze medical images, patient records, and genomic data to support personalized diagnoses.
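
One simple way to combine several data streams is late fusion, where each modality produces its own confidence score and the scores are merged afterwards. The sketch below illustrates this with a weighted average. It is a toy example: the function name `fuse_confidences`, the modality names, the scores, and the weights are all hypothetical, and real systems usually learn the fusion inside the model rather than fixing weights by hand.

```python
def fuse_confidences(scores, weights):
    """Late fusion: weighted average of per-modality confidence scores.

    Modalities missing from `scores` (for example, a failed sensor) are
    skipped and the remaining weights are renormalized. Weights are assumed
    to be positive.
    """
    available = sorted(m for m in scores if m in weights)
    if not available:
        raise ValueError("no modality has both a score and a weight")
    total_weight = sum(weights[m] for m in available)
    return sum(scores[m] * weights[m] for m in available) / total_weight


# Hypothetical per-sensor confidence that an obstacle is ahead.
weights = {"camera": 0.5, "lidar": 0.3, "radar": 0.2}
scores = {"camera": 0.9, "lidar": 0.8, "radar": 0.6}

fused = fuse_confidences(scores, weights)
print(round(fused, 2))

# If the LiDAR reading is unavailable, the other sensors still produce a result.
degraded = fuse_confidences({"camera": 0.9, "radar": 0.6}, weights)
print(round(degraded, 4))
```

Renormalizing over the available modalities lets the system degrade gracefully when one input stream drops out, which matters for autonomous systems that cannot simply stop when a sensor fails.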

By 2030, AI powered by Big Data is expected to drive major gains in efficiency and innovation. However, its societal impact will depend on addressing ethical, technical, and accessibility challenges. Cross-sector collaboration and investment in education will be key to democratizing the benefits of AI.
